Executive Summary

A startup used AI to translate its code into a different programming language, which introduced a bug that led to a multi-day outage.

Proper testing and deployment setups could’ve prevented this, but the startup was only a few weeks old, so testing infrastructure was not yet a priority.

As AI-generated code becomes more prevalent, companies must focus on rigorous testing, phased rollouts, and strategic prioritization of critical systems to ensure reliability without stifling innovation.

Blindly trusting AI can cost you $$$.

It certainly did for this startup.

Their engineering team used AI to translate code into a different language. It worked for the most part, except for one hard-to-catch bug.

The AI mistranslated a line of code so that it hardcoded the same ID for every new user.

This resulted in a 5-day outage and at least $10k in lost revenue.

In today’s article, we dive into what happened, why it happened, and how it could’ve been prevented.

Why It Happened

First off, this bug was so catastrophic because UUIDs (universally unique identifiers) are expected to be UNIQUE: no two users should ever have the same ID.

If two users are assigned the same ID, the system won’t understand which user’s information it is operating on.

This assumption of uniqueness is baked into every part of their software, so breaking it can cause failures across all services.

And this is exactly what happened with this startup.

Every user was assigned the exact same UUID during the sign-up flow. It worked for the very first user but failed for every user after that, since the UUID was already taken.

This broke their onboarding flow and led to dozens of angry customer support messages before they finally resolved the issue.
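The source doesn’t show the exact line the AI produced, so here is a minimal, hypothetical Python sketch of this class of bug. The `users` table, `BUGGY_DEFAULT_ID`, and the `sign_up_*` functions are illustrative assumptions, with an in-memory SQLite table standing in for the real database. The buggy version generates a UUID once, when the module loads, and hands that same value to every new user; the fixed version generates a fresh UUID on each sign-up.

```python
import sqlite3
import uuid

# Hypothetical schema: the primary key enforces that user IDs are unique.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id TEXT PRIMARY KEY, email TEXT NOT NULL)")

# BUG (illustrative): this UUID is generated once, at import time,
# so every sign-up receives the same "random" value.
BUGGY_DEFAULT_ID = str(uuid.uuid4())


def sign_up_buggy(email: str) -> str:
    conn.execute(
        "INSERT INTO users (id, email) VALUES (?, ?)", (BUGGY_DEFAULT_ID, email)
    )
    return BUGGY_DEFAULT_ID


def sign_up_fixed(email: str) -> str:
    # FIX: generate a new UUID for each sign-up.
    user_id = str(uuid.uuid4())
    conn.execute("INSERT INTO users (id, email) VALUES (?, ?)", (user_id, email))
    return user_id


sign_up_buggy("first@example.com")  # works: the table is still empty
try:
    sign_up_buggy("second@example.com")  # fails: that ID is already taken
except sqlite3.IntegrityError as err:
    print("second sign-up failed:", err)

# The fixed version keeps working for every new user.
a = sign_up_fixed("third@example.com")
b = sign_up_fixed("fourth@example.com")
print("fixed IDs differ:", a != b)
```

The first buggy sign-up succeeds and the second raises `sqlite3.IntegrityError` because the primary key is already taken, which mirrors the “works for the first user, fails for everyone after” pattern.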

History Repeating Itself

Ironically, ID collisions have caused problems before!

GitHub faced this same issue in 2013, when it wasn’t using a strong enough random number generator.

At GitHub’s scale, the system was generating large numbers of UUIDs quickly, which increased the chances of a “collision”, where the same ID is generated twice.
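To see why generating lots of IDs quickly raises the odds of a collision, here is a small Python sketch of the standard birthday-problem approximation, p ≈ 1 - exp(-n(n - 1) / 2N), for n IDs drawn uniformly at random from N possible values. The function name and example numbers are our own assumptions, not figures from GitHub’s incident. Note that the formula assumes every ID is equally likely, so a weak random number generator can do worse than it predicts.

```python
import math


def collision_probability(num_ids: int, random_bits: int) -> float:
    """Approximate chance that at least two of `num_ids` random IDs collide.

    Assumes each ID is drawn uniformly from 2**random_bits possible values.
    """
    space = 2 ** random_bits  # N: number of possible IDs
    # Birthday approximation: p ~= 1 - exp(-n(n - 1) / (2N)).
    exponent = -num_ids * (num_ids - 1) / (2 * space)
    # expm1 keeps precision when the probability is tiny.
    return -math.expm1(exponent)


if __name__ == "__main__":
    # A small 32-bit ID space vs. version-4 UUIDs (122 random bits).
    print(collision_probability(100_000, 32))
    print(collision_probability(1_000_000_000, 122))
```

With 100,000 IDs drawn from a 32-bit space, the chance of at least one collision is about 69%, while a billion version-4 UUIDs leave it vanishingly small. A weak generator effectively shrinks N, which is how collisions creep back in at scale.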

This leads us to our first lesson: UUID generation is a Tier-1 feature that should be held to the most thorough testing and deployment requirements.

Engineering managers should ask themselves which features in their system are Tier-1, and make sure those are covered first so they don’t break.

Some other examples of Tier-1 features include:

payments - because otherwise you won’t get paid

authentication - if broken, users can’t log in or use your product

onboarding - because otherwise you’re losing new customers and hurting growth

How This Could’ve Been Prevented

There are two layers to addressing an issue like this:

Preemptive - How could this issue have been detected before it reaches customers?

Reactive - How fast can we detect an issue and fix it after it’s deployed?

Preemptively, they could’ve run an automated test suite before pushing changes to production.

If they had a test that onboarded two users in quick succession, this issue would’ve been caught: the second user would’ve been assigned the same ID, and the sign-up would’ve failed.
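A test like that could look something like the pytest-style sketch below. `UserStore`, `create_user`, and `DuplicateUserIdError` are hypothetical stand-ins for the startup’s real sign-up code and database. Injecting the ID generator (`id_factory`, also an assumption) lets the same check be run against a deliberately broken generator to confirm the test actually catches the bug.

```python
import uuid
from typing import Callable, Dict


class DuplicateUserIdError(Exception):
    """Raised when a new user would reuse an ID that is already taken."""


def _new_uuid() -> str:
    return str(uuid.uuid4())


class UserStore:
    """Hypothetical in-memory stand-in for the real users table."""

    def __init__(self, id_factory: Callable[[], str] = _new_uuid) -> None:
        self._id_factory = id_factory
        self._users: Dict[str, str] = {}

    def create_user(self, email: str) -> str:
        user_id = self._id_factory()
        # Enforce uniqueness the way a primary-key constraint would.
        if user_id in self._users:
            raise DuplicateUserIdError(user_id)
        self._users[user_id] = email
        return user_id


def check_two_signups_get_distinct_ids(store: UserStore) -> None:
    """Onboard two users back to back and verify they get different IDs."""
    first = store.create_user("first@example.com")
    # With a hardcoded ID, this second call raises DuplicateUserIdError.
    second = store.create_user("second@example.com")
    assert first != second, "two users were assigned the same ID"


def test_onboarding_assigns_unique_ids() -> None:
    # pytest collects functions named test_*; this uses the real generator.
    check_two_signups_get_distinct_ids(UserStore())
```

In a real suite, the same check would go through the actual sign-up endpoint or database rather than an in-memory dictionary.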

We actually set up similar automated testing for a client recently using a framework called Playwright.

Playwright can simulate mouse clicks and form fills, and its tests can run on an automated schedule, which would’ve worked well for this startup’s onboarding flow.

In terms of reaction time, they could have set up alerting on key metrics, like signups per hour, and used PagerDuty to page the engineering team whenever a metric dropped below a threshold.

This would’ve definitely triggered in their case.
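Here is a minimal sketch of that alert check in Python, assuming signup timestamps are available as timezone-aware `datetime`s. The `page` callback is a hypothetical hook: in a real setup it would be whatever sends the alert to PagerDuty (for example, through its Events API). The function names and threshold are illustrative.

```python
from datetime import datetime, timedelta, timezone
from typing import Callable, Iterable


def signups_in_last_hour(signup_times: Iterable[datetime], now: datetime) -> int:
    """Count signups in the hour ending at `now`."""
    window_start = now - timedelta(hours=1)
    return sum(1 for t in signup_times if window_start < t <= now)


def check_signup_rate(
    signup_times: Iterable[datetime],
    now: datetime,
    threshold: int,
    page: Callable[[str], None],
) -> bool:
    """Page the on-call engineer if signups drop below `threshold`.

    Returns True if an alert was sent.
    """
    count = signups_in_last_hour(signup_times, now)
    if count < threshold:
        # Hypothetical hook: in production this would trigger a PagerDuty incident.
        page(f"Only {count} signups in the last hour (threshold: {threshold})")
        return True
    return False


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Simulated outage: the last signup was three hours ago.
    recent = [now - timedelta(hours=3)]
    check_signup_rate(recent, now, threshold=5, page=print)
```

A fixed threshold is the simplest version. A production alert would probably compare against the same hour on previous days, so quiet overnight periods don’t page anyone.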

It’s also surprising that this issue took 5 days to resolve: had they logged the errors along with the affected customer information and then looked them up, the error messages would’ve made it clear that the IDs were already taken.
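As a rough illustration, here is a Python sketch that logs failed sign-ups with enough context to diagnose them. The `sign_up` function and `users` schema are hypothetical, and the optional `user_id` argument exists only so the duplicate-ID case can be reproduced. `logger.exception` records the database error and its traceback alongside the affected email and ID, so a duplicate-key failure would stand out in the logs.

```python
import logging
import sqlite3
import uuid
from typing import Optional

logger = logging.getLogger("onboarding")


def create_schema(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: the primary key rejects duplicate user IDs.
    conn.execute("CREATE TABLE users (id TEXT PRIMARY KEY, email TEXT NOT NULL)")


def sign_up(
    conn: sqlite3.Connection, email: str, user_id: Optional[str] = None
) -> str:
    # Normally a fresh UUID; `user_id` can be passed in to reproduce a collision.
    user_id = user_id or str(uuid.uuid4())
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO users (id, email) VALUES (?, ?)", (user_id, email)
            )
    except sqlite3.IntegrityError:
        # Log the error together with the customer context, then re-raise.
        logger.exception("Sign-up failed for email=%s user_id=%s", email, user_id)
        raise
    return user_id


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    db = sqlite3.connect(":memory:")
    create_schema(db)
    sign_up(db, "first@example.com", user_id="same-id")
    try:
        sign_up(db, "second@example.com", user_id="same-id")
    except sqlite3.IntegrityError:
        pass  # the log entry already captured the details
```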

Did the Founders Not Prioritize Testing Enough?

It’s easy to blame the founders for not having deployment, alerting, and testing infrastructure.

However, there are two things to keep in mind. First, they actually just got unlucky.

In their post-mortem, they point out that they’ve only been around for a few weeks!

At such an early stage, it’s hard to prioritize platform stability over feature development, since they haven’t yet reached product-market fit.

That said, at a more mature company, this lack of infrastructure would be unforgivable.

We saw this with CrowdStrike, a publicly traded company, where a faulty update pushed to production crashed Windows machines and brought banks, hospitals, and airports to a standstill.

Second, this type of issue will become less and less common as AI improves.

OpenAI has made it clear that it is working to improve its models’ reasoning capabilities. Sam Altman has even said in an interview that founders should avoid trying to patch small flaws in the models and instead bank on the models improving over time.

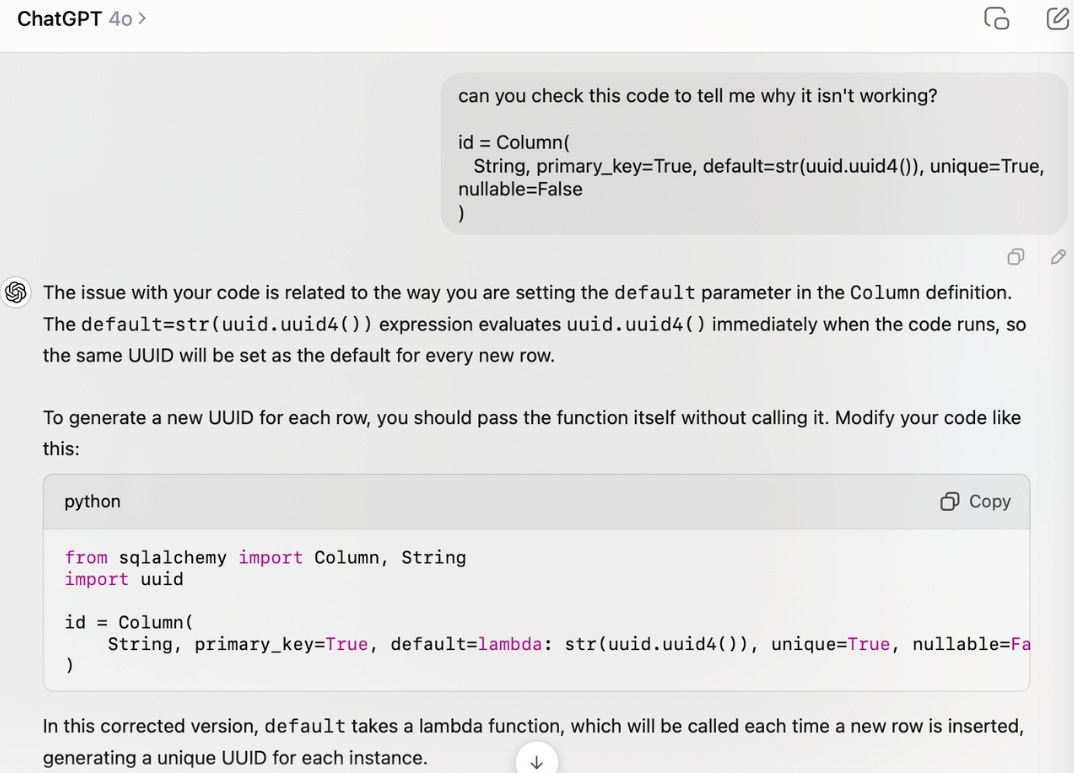

In fact, it’s clear that this was a reasoning issue, because had ChatGPT been prompted about whether there was a bug in that line, it would’ve found it!

This suggests AI is capable of catching these issues with chained prompting, which will likely be built into the models as their reasoning improves.

The Growing Trend of AI-Generated Code

To be clear: this is not AI’s fault.

A bug like this could’ve been introduced by a human, passed the exact same tests, and caused the exact same outage.

The use of AI-generated code will only increase. Google’s CEO has even said that more than 25% of new code at Google is now generated by AI!

So rather than avoiding AI-generated code, we must focus on setting up the right infrastructure to keep issues from being introduced.

As the old saying goes:

Trust, but verify (AI-generated code).

Some questions to assess your incident readiness include:

If a bug is introduced, is there a process for rolling it back?

What is your deployment process for introducing changes (if you have one at all)?

If a bug is introduced, how can we make the blast radius smaller (for example, with phased rollouts)?

What are the most critical features that we have to keep running?

If a bug is introduced, what is the worst that could happen?
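Phased rollouts are one way to shrink the blast radius. One common approach, sketched below in Python, hashes each user ID into a stable bucket from 0 to 99 and only enables a change for users whose bucket falls under the rollout percentage. The function names and feature key are hypothetical, and this is just one way to implement it; many teams use a feature-flag service instead.

```python
import hashlib


def rollout_bucket(user_id: str, feature: str) -> int:
    """Map a user to a stable bucket in [0, 100) for a given feature."""
    # Including the feature name keeps different rollouts independent.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Return True if `user_id` should get `feature` at `percent`% rollout."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return rollout_bucket(user_id, feature) < percent


if __name__ == "__main__":
    # Start a hypothetical new sign-up flow with 10% of users.
    for uid in ["user-1", "user-2", "user-3"]:
        print(uid, is_in_rollout(uid, "new-signup-flow", 10))
```

Because the bucket comes from a hash rather than a fresh random draw on each request, a user stays consistently in or out of the rollout, and raising the percentage from 10 to 50 only adds users. If the new code misbehaves, only that slice of users is affected, and setting the percentage to 0 acts as a quick rollback.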

Bugs are impossible to prevent entirely, but how fast you detect and recover from them is within your control.

💡 Need Help with AI Implementations?

Feel free to contact us at our website here for a free 30-minute consultation.

If you know someone who needs help with AI, refer them to us and receive a $1,000 reward.

And if you liked this article, follow me on LinkedIn for daily, revenue-impacting AI content.

Our company LinkedIn page is here.