How AI Visual Search Helps Your Customers Find Products Faster

Examples from the Home Depot, Pinterest, and Amazon implementations

Executive Summary

Visual Search allows customers to use photos to search for products.

The ROI is significant: implementation time is modest, while the potential increase in orders and the reduction in operational workload both affect the bottom line.

We recommend starting with pre-trained AI models, which are quicker and more cost-effective to implement. For more customized needs, you can explore ready-made SaaS solutions, and if needed, fine-tune AI models using your own product images.

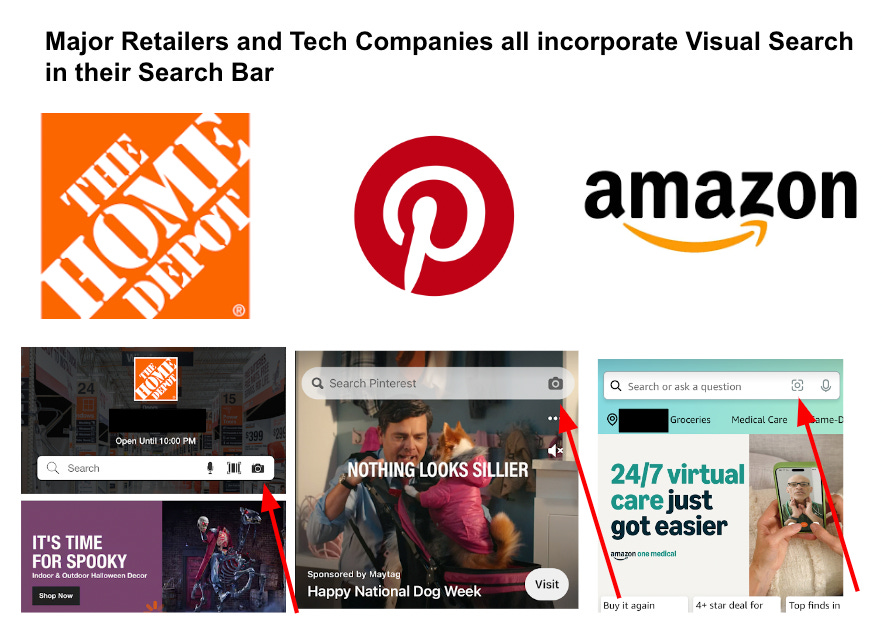

Many retailers are racing to develop AI capabilities, with AI Visual Search catching the attention of industry giants.

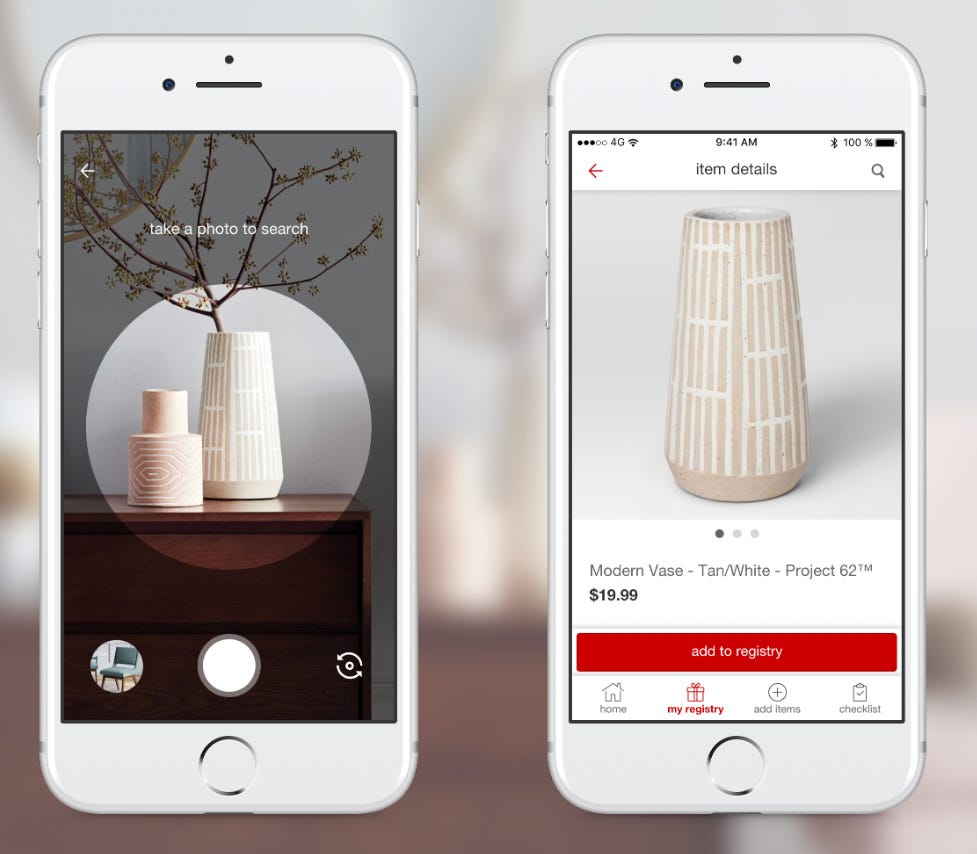

Visual Search allows customers to snap photos of products to find the exact product, or similar items, for sale.

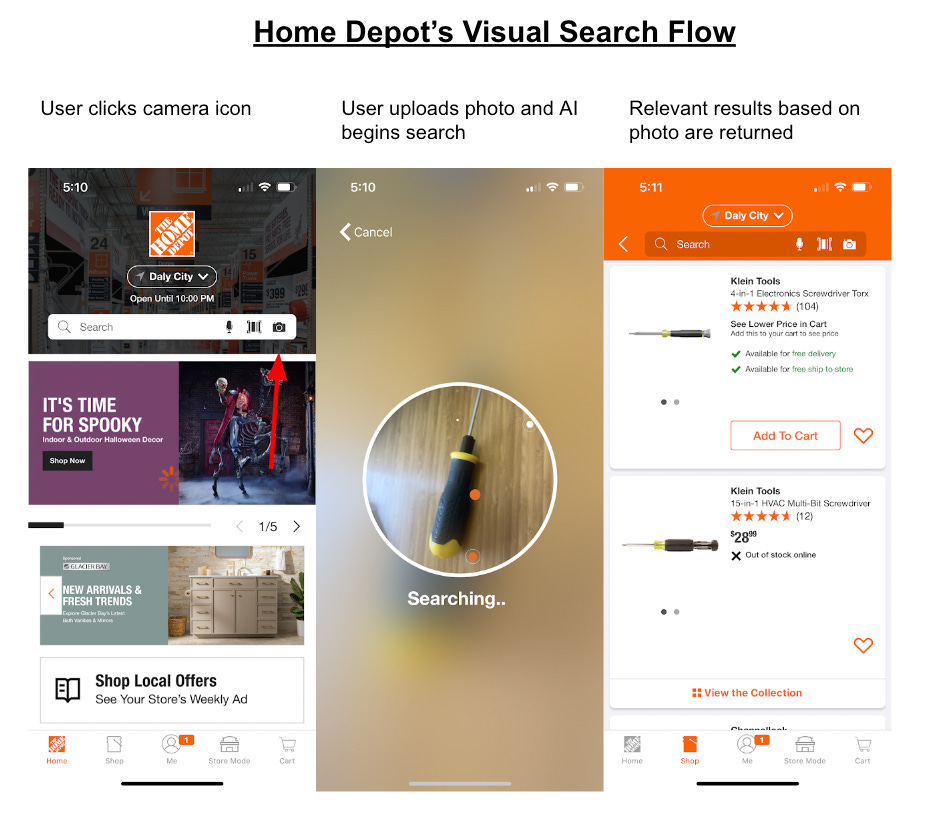

In fact, this type of functionality has the same user experience across apps:

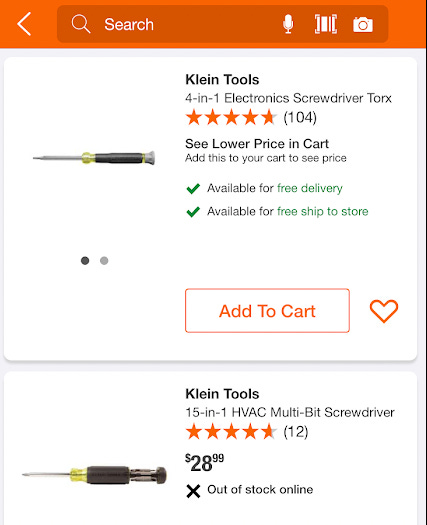

1. The user clicks the photo icon in the search bar.
2. They upload a photo or take one with their camera.
3. AI identifies the object and searches the catalogue for relevant products.

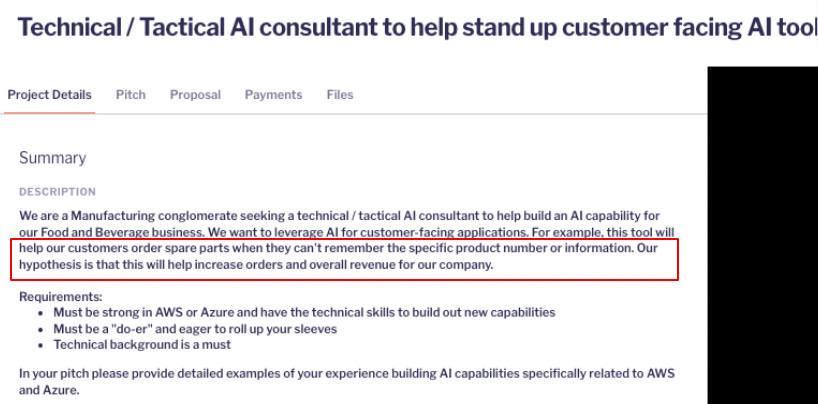

We even spoke with a major manufacturing conglomerate that was interested in building something similar.

They wanted to develop AI capabilities so that customers could order spare parts when they couldn’t remember the specific product number or information.

For companies looking to streamline customer experience and improve operational efficiency, Visual Search is a compelling solution worth exploring.

The ROI of Visual Search is Two-Fold

AI-powered Visual Search offers significant ROI for businesses with large inventories, such as spare parts or equipment retailers, in two ways:

By helping customers find products quickly, thus increasing sales

By reducing in-store operations

Visual Search helps customers find products faster

Visual search has a major advantage over traditional text-based search in that customers don’t need to know the exact terminology to search for a product.

This is particularly helpful when the buyer is not well-versed in the lingo of the product.

Furthermore, traditional text-based search systems rely on customers using the correct product names, which can be a major limitation when colloquialisms for equipment (for example "weed whacker" vs "string trimmer") are used interchangeably.

How Visual Search reduces in-store operations

The benefits extend to operations as well.

When Home Depot implemented Visual Search, they found that a significant amount of usage came from in-store associates trying to identify parts customers brought into the shop and locate them on the shop floor.

It’s not just customers using Visual Search—employees also rely on it to better assist shoppers.

This two-fold ROI from visual search makes the business case for implementing it compelling.

As the Senior Director of Management Platforms at Home Depot, Shawn Coombs commented:

How to Implement Visual Search

If you’re looking to implement visual search yourself, you have three main options:

1. Integrate with pre-existing SaaS solutions
2. Integrate with pre-trained models
3. Fine-tuning, where you customize a model using your own product images to improve its accuracy and relevance for your specific catalog

Our general recommendation is to try option 2 first, then option 1, then option 3, as this progression allows businesses to explore less expensive and quicker solutions before moving to more specialized and customized options.

Option 1: Integration with pre-existing solutions

There are a significant number of companies that offer this type of technology out of the box.

For example, JC Penney integrated with Slyce and Macy's integrated with Cortexica, both of which were major computer vision companies before being acquired.

Home Depot launched their initial version of Visual Search with Partium in 2020.

It’s worth noting that many of these integrations happened before the explosion of ChatGPT in 2022.

It also appears that many of these companies eventually choose to develop these capabilities in-house.

In a press release in 2022, Home Depot announced that their tech team decided to build their search capabilities from scratch after not being able to find a suitable off-the-shelf solution.

Demos are free, so it’s worth scheduling a few just to see what their capabilities look like.

Option 2: Integration with pre-trained models

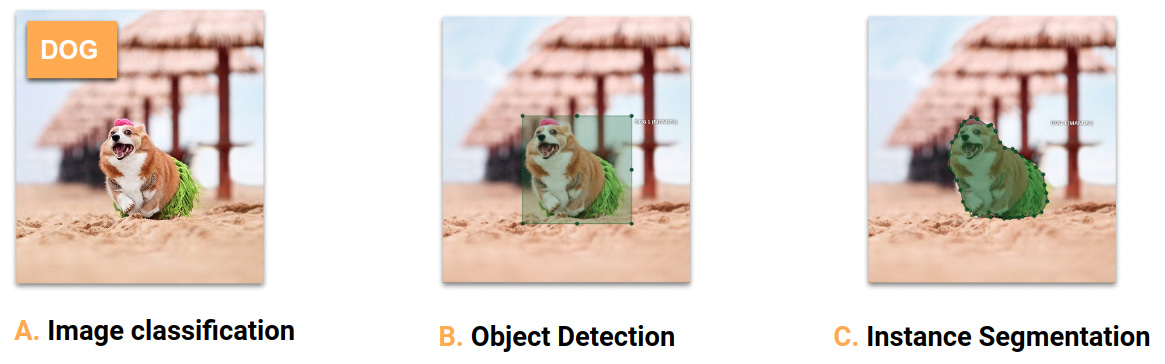

The second and most recommended option is integrating with pre-trained models.

Generalist models like ChatGPT Vision already have built-in object recognition capabilities. Their output can then be used to determine the right keywords to search for.

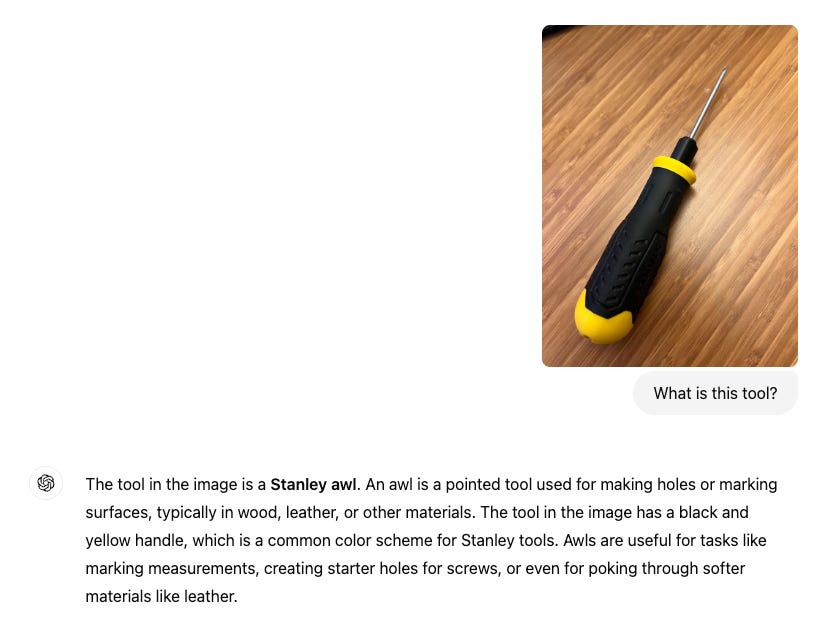

For example, if I send an image of a tool to ChatGPT, it recognizes the color, brand, and exact type of tool.

By contrast, when we passed the same picture into Home Depot’s search, it misidentified the awl as a screwdriver.

Pre-trained models recognize a surprisingly large number of products already, and it’s very possible that an integration with one of these models alone is enough to implement Visual Search.
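As a rough illustration of how a model's description of a photo could drive an ordinary keyword search, here is a minimal sketch. The catalog entries, SKUs, and the `describe_image` callable are all hypothetical: in practice `describe_image` would wrap a call to whichever vision model you choose and return attributes such as brand and tool type. Here it is stubbed out so the example runs on its own.

```python
from typing import Callable

# Hypothetical catalog; SKUs and titles are made up for illustration.
CATALOG = [
    {"sku": "A100", "title": "Stanley wood handle scratch awl"},
    {"sku": "B200", "title": "Stanley slotted screwdriver"},
    {"sku": "C300", "title": "Cordless string trimmer"},
]


def tokens(text: str) -> set[str]:
    """Lowercase a string and split it into a set of words."""
    return set(text.lower().split())


def visual_search(
    image: bytes,
    describe_image: Callable[[bytes], dict],
    top_k: int = 3,
) -> list[dict]:
    """Turn a photo into keywords, then rank catalog items by keyword overlap."""
    attributes = describe_image(image)  # e.g. {"brand": ..., "type": ...}
    query = tokens(" ".join(str(value) for value in attributes.values()))
    scored = [(len(query & tokens(item["title"])), item) for item in CATALOG]
    # Keep only items sharing at least one keyword, best match first.
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in matches[:top_k]]


def fake_describe(image: bytes) -> dict:
    """Stand-in for a real vision model call; returns fixed attributes."""
    return {"brand": "Stanley", "type": "scratch awl"}


results = visual_search(b"raw-image-bytes", fake_describe)
print([r["sku"] for r in results])  # prints ['A100', 'B200']
```

Passing the model call in as a function keeps the search logic independent of any one provider, so you can swap models without touching the ranking code. A production system would more likely hand the keywords to your existing search engine than use this simple word-overlap score.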

For more complex product catalogs, specialized computer vision models may be required, such as YOLO for object detection or ResNet and EfficientNet for image classification. These models can be adapted to a specific product range and can provide higher accuracy for niche or proprietary items.
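One common way to use image models such as ResNet or EfficientNet for visual search is as feature extractors: each product image is turned into a vector (an embedding), and a customer's photo is matched to the catalog items with the most similar vectors. The sketch below shows only the matching step, using cosine similarity. The three-dimensional vectors and product names are made up for illustration; real embeddings come from the model and have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy embeddings, invented for this example.
CATALOG_EMBEDDINGS = {
    "awl": [0.9, 0.1, 0.0],
    "screwdriver": [0.7, 0.6, 0.1],
    "string-trimmer": [0.0, 0.2, 0.9],
}


def nearest_products(query: list[float], k: int = 2) -> list[str]:
    """Return the k catalog items whose embeddings are closest to the query."""
    scored = [
        (cosine_similarity(query, vector), name)
        for name, vector in CATALOG_EMBEDDINGS.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Pretend this vector came from the customer's photo.
query_embedding = [0.85, 0.2, 0.05]
print(nearest_products(query_embedding))  # prints ['awl', 'screwdriver']
```

For a large catalog, the linear scan here would normally be replaced by a vector index, but the ranking idea is the same.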

Additionally, managed services like Google Cloud Vision and Amazon Rekognition offer scalable solutions for large businesses.

Option 3: Fine-tuning a model

The last option would be to fine-tune a model on your own products.

Fine-tuning is typically necessary when a business's product catalog contains unique or niche items that pre-trained models might not recognize with high accuracy.

By training the model on custom data, businesses can achieve more precise search results.

With this option you would collect a substantial amount of high-quality images of each of your products, with photos taken from different angles, lighting conditions, and variations in packaging or appearance.

Each image would then need to be labeled with the correct SKU, product name, or category. Data-labeling services exist for this.
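Before any training starts, it can help to check that every product actually has enough labeled photos. The sketch below counts labeled images per SKU and flags the ones that fall short. The file paths, SKUs, and the minimum of three images are assumptions for illustration only; the right threshold depends on your products and model.

```python
from collections import Counter

# Hypothetical labeled records: (image file, SKU). Paths and SKUs are made up.
LABELS = [
    ("img/0001.jpg", "SKU-1"),
    ("img/0002.jpg", "SKU-1"),
    ("img/0003.jpg", "SKU-1"),
    ("img/0004.jpg", "SKU-2"),
    ("img/0005.jpg", "SKU-3"),
    ("img/0006.jpg", "SKU-3"),
]


def underrepresented_skus(labels: list[tuple[str, str]], min_images: int) -> list[str]:
    """Return SKUs that have fewer than min_images labeled photos."""
    counts = Counter(sku for _, sku in labels)
    return sorted(sku for sku, n in counts.items() if n < min_images)


print(underrepresented_skus(LABELS, min_images=3))  # prints ['SKU-2', 'SKU-3']
```

Note that this only sees SKUs that have at least one labeled image; products with no photos at all would need to be found by comparing against the full catalog.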

You would choose a pre-trained model that is well suited to the task, as different models are better at recognizing different kinds of objects.

Once the images are labeled, the pre-trained model is fine-tuned using your specific dataset to improve accuracy for your products.

Testing and validation happen on a separate dataset that wasn’t used for training, to assess the model's performance and accuracy. If the model performs well, it is then rolled out.
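The hold-out idea can be sketched in a few lines: set aside a portion of the labeled images, never train on them, and measure how often the model's top guess matches the true SKU. The 20% hold-out fraction, the record format, and the `predict` callable are assumptions for illustration. In practice `predict` would be your fine-tuned model, and you would likely track more than one metric.

```python
import random
from typing import Callable

Record = tuple[str, str]  # (image id, true SKU)


def split_holdout(
    records: list[Record], holdout_fraction: float = 0.2, seed: int = 0
) -> tuple[list[Record], list[Record]]:
    """Shuffle the records and split them into (training, hold-out) sets."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_holdout = max(1, int(len(shuffled) * holdout_fraction))
    return shuffled[n_holdout:], shuffled[:n_holdout]


def top1_accuracy(predict: Callable[[str], str], holdout: list[Record]) -> float:
    """Fraction of hold-out images where the predicted SKU is correct."""
    correct = sum(1 for image, sku in holdout if predict(image) == sku)
    return correct / len(holdout)


# Made-up dataset: ten images spread across three SKUs.
records = [(f"img-{i}.jpg", f"SKU-{i % 3}") for i in range(10)]
train, holdout = split_holdout(records)
print(len(train), len(holdout))  # prints 8 2

# A stand-in "model" that always answers correctly, to show the metric.
truth = dict(records)
print(top1_accuracy(lambda image: truth[image], holdout))  # prints 1.0
```

Fixing the random seed keeps the split reproducible, so accuracy numbers from different training runs are measured on the same hold-out images.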

Note, however, that fine-tuning is the most resource-intensive option. In return, it offers the highest level of accuracy and customization, making it ideal for businesses with highly specific or proprietary products.

Which Implementation Strategy Should You Choose?

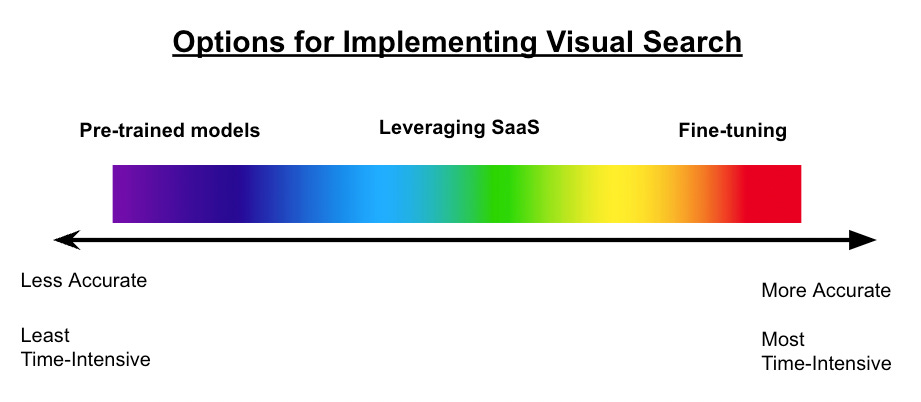

We would generally recommend trying option 2: integration with pre-trained models first, option 1: integrating with pre-existing solutions second, and option 3: fine-tuning third.

At a high level, the reasoning for this order can be visualized as a spectrum.

Pre-trained models are the least accurate, but also the least time-intensive to implement. SaaS is in the middle, and fine-tuning is at the other extreme.

Starting with pre-trained models helps de-risk the project as you commit the least amount of resources, while still potentially getting acceptable results.

Pre-trained models also already perform quite well for many items, and their performance is likely to keep improving over time. As we saw above with the Stanley awl example, ChatGPT actually performed better than Home Depot’s native search.

This approach also scales well, as many pre-trained cloud solutions can comfortably handle enterprise volumes of daily searches.

Fine-tuning can be very costly, as data labeling and preparation take considerable time. Furthermore, if you have an expansive catalogue, you would need to repeat this process for every new item you add, which increases the operational burden, so it doesn’t scale well.

Out-of-the-box solutions can work well; however, they can be expensive and may still miss some searches.

So in summary:

If pre-trained models work well → Go with Option 2: Pre-trained models

If your catalog contains proprietary, unique, or highly detailed products → Go with Option 3: Fine-tuning

If your company lacks in-house engineering capabilities → Go with Option 1: Pre-built SaaS
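The summary above can also be written as a small decision helper. This is only a sketch: the function name and the order in which the conditions are checked are our own assumptions for cases where more than one condition applies, and the fallback follows the 2, then 1, then 3 progression recommended earlier.

```python
def recommend_option(
    pretrained_works_well: bool,
    has_engineering_team: bool,
    niche_catalog: bool,
) -> str:
    """Map the three summary conditions to a recommended option."""
    # Assumed precedence: without engineers, options 2 and 3 are impractical.
    if not has_engineering_team:
        return "Option 1: Pre-built SaaS"
    if pretrained_works_well:
        return "Option 2: Pre-trained models"
    if niche_catalog:
        return "Option 3: Fine-tuning"
    # Pre-trained models fell short but the catalog is not niche: try SaaS next.
    return "Option 1: Pre-built SaaS"


print(recommend_option(True, True, False))   # prints Option 2: Pre-trained models
print(recommend_option(False, True, True))   # prints Option 3: Fine-tuning
print(recommend_option(True, False, False))  # prints Option 1: Pre-built SaaS
```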

Final Thoughts

Visual search is one of the highest-ROI AI initiatives you can incorporate into your business.

If you decide to commit resources down this path, follow our recommendation of using pre-trained models first, then SaaS, then fine-tuning to de-risk the project.
