SLMs vs. LLMs: Which Should You Choose?

Size matters...for model selection

Executive Summary

Small Language Models (SLMs) are often regarded as ideal for 'narrow' tasks. However, the definition of 'narrow' in AI is much more restrictive than what most people assume.

SLMs can have hidden integration challenges — such as the need for prompt adjustments — which can delay timelines.

SLMs are most effective for tasks aligned with their benchmarks or under strict resource constraints. For most clients, however, starting with an LLM proves more efficient, reducing experimentation time and speeding up results.

A client recently asked me: should they use a Small Language Model (SLM) or a Large Language Model (LLM) like ChatGPT or Claude for their AI project?

They wanted to train the model on their enterprise data, which they felt was a small, focused domain.

Naturally, they wondered if an SLM might be a more efficient and cost-effective choice. Here are my thoughts.

What is the difference between an SLM and an LLM?

First, let’s clarify the difference between an SLM and an LLM.

The terms "small" and "large" refer to the number of parameters in the model—numerical values which the model adjusts during training to understand language patterns from large datasets.

Models with fewer parameters (SLMs) are designed to require less computational power, time, and cost to train and run.

DistilBERT, for instance, is about 40% smaller than its larger counterpart, BERT, and runs roughly 60% faster at inference.
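To make "parameter count" concrete, here is a rough back-of-the-envelope sketch of how much memory a model's weights alone would need. The parameter counts are approximate published figures for DistilBERT and BERT-base, the 7B entry is a hypothetical larger model added for scale, and the function name is my own. It ignores activations, optimizer state, and runtime overhead, so treat it as a lower bound rather than a sizing guide.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores activations, optimizer state, and runtime overhead.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str = "fp32") -> float:
    """Approximate memory needed to hold the weights, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Approximate parameter counts; the 7B entry is a hypothetical larger model.
models = {
    "DistilBERT (~66M)": 66_000_000,
    "BERT-base (~110M)": 110_000_000,
    "hypothetical 7B model": 7_000_000_000,
}

for name, n in models.items():
    fp32 = weight_memory_gb(n, "fp32")
    fp16 = weight_memory_gb(n, "fp16")
    print(f"{name}: {fp32:.2f} GB in fp32, {fp16:.2f} GB in fp16")
```

The gap between the first two rows and the last is what makes on-device deployment realistic for small models and difficult for large ones.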

With this in mind, let’s break down some of the assumptions in the client’s statement.

Assumption 1: SLMs are better for narrow tasks

It’s often said that SLMs are better suited for narrow or specific tasks.

However, 'narrow' in the context of AI benchmarks is often far narrower than most real-world use cases, creating a gap between expectations and reality.

For instance, DistilBERT, an SLM, is frequently cited as retaining 97% of the performance of BERT, its larger counterpart.

This claim is based on the GLUE benchmark (General Language Understanding Evaluation), a suite of nine tasks testing natural language problems like:

sentiment analysis

text classification

question answering

measuring text similarity.

However, these tasks are mostly run on single sentences or sentence pairs, rather than the long documents common in enterprise settings.

Strong GLUE results also do not guarantee similar performance on complex tasks involving ambiguity or multi-step reasoning, as these are not included in the benchmark.

While SLMs might work well for narrower use cases, the scope of most client requirements extends beyond the definition of “narrow” used in AI benchmarks.
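A quick sketch of the arithmetic shows why a headline figure like "97% retained" depends on which tasks are in the average. Every score below is hypothetical and chosen only for illustration; none comes from GLUE or any published evaluation, and the task names and helper functions are my own.

```python
# All scores are hypothetical, chosen only to illustrate the arithmetic.
large_scores = {"sentiment": 93.0, "similarity": 89.0, "multi_step_reasoning": 80.0}
small_scores = {"sentiment": 91.0, "similarity": 87.0, "multi_step_reasoning": 56.0}


def retention(small: float, large: float) -> float:
    """Percentage of the large model's score that the small model keeps."""
    return 100.0 * small / large


def average_retention(tasks: list) -> float:
    """Retention computed on the average score across the given tasks."""
    small_avg = sum(small_scores[t] for t in tasks) / len(tasks)
    large_avg = sum(large_scores[t] for t in tasks) / len(tasks)
    return retention(small_avg, large_avg)


benchmark_tasks = ["sentiment", "similarity"]           # what the benchmark covers
real_world_tasks = benchmark_tasks + ["multi_step_reasoning"]  # what the client needs

print(f"Benchmark-only retention: {average_retention(benchmark_tasks):.1f}%")
print(f"With reasoning task:      {average_retention(real_world_tasks):.1f}%")
for task in real_world_tasks:
    print(f"  {task}: {retention(small_scores[task], large_scores[task]):.1f}%")
```

With these made-up numbers, the small model looks nearly equivalent on the benchmark tasks, while the one task outside the benchmark accounts for most of the gap.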

Assumption 2: SLMs are better for specific domains

The client also assumed that training an SLM on internal documents would give it a performance edge over generalized LLMs in their specific domain.

Surprisingly, this assumption doesn’t always hold true.

A recent paper comparing domain-specific models like BloombergGPT—a financial language model trained on extensive financial data—with generalized LLMs like GPT-4 revealed striking results.

BloombergGPT performed well on tasks such as sentiment analysis of financial documents.

However, GPT-4, despite not being trained specifically for finance, outperformed BloombergGPT across many other financial tasks.

This includes areas where domain expertise might be assumed to give BloombergGPT an advantage.

The findings suggest that larger model size, broader pre-training, and advanced architectures enable generalized LLMs like GPT-4 to perform effectively even within specific domains.

The Hidden Cost of Using SLMs

SLMs also come with hidden integration challenges.

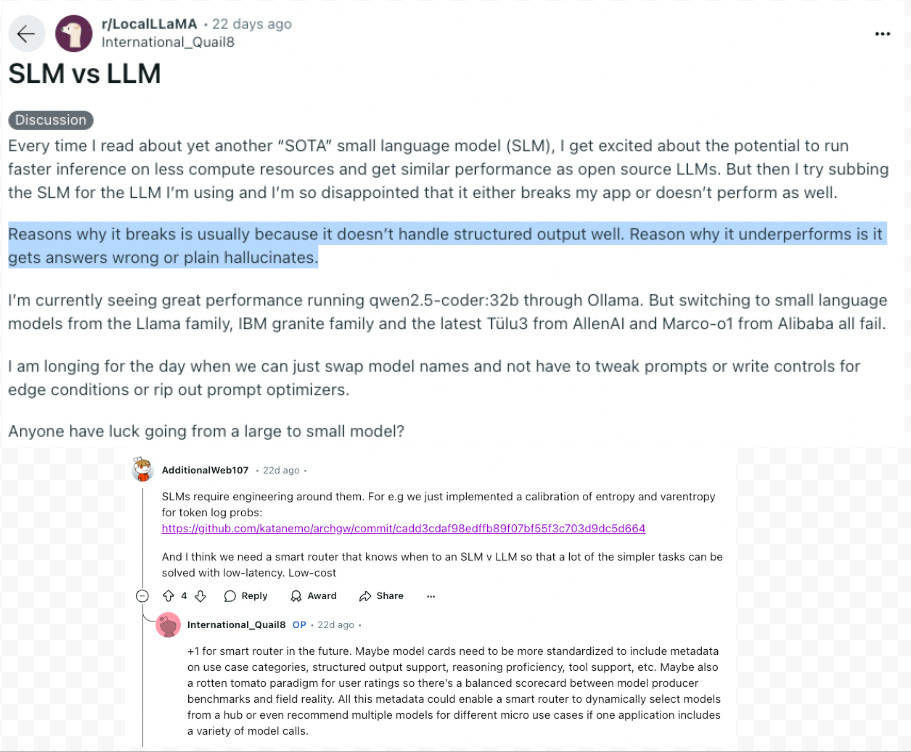

One engineer on Reddit documented their experience switching to an SLM and highlighted several unexpected roadblocks.

Questions that were answered properly before suddenly broke, bugs appeared, and prompts had to be rewritten to account for edge cases.

This added significant complexity to their workflow, delaying the project.
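One way to catch this kind of breakage early is to run a small "golden set" of questions through both models before switching. The sketch below is a minimal illustration, not a full evaluation framework: `current_model` and `candidate_model` are hypothetical stand-ins for real model calls, and the question and the substring check are assumptions made for the example.

```python
from typing import Callable, Dict, List


# Hypothetical stand-ins for real model calls; swap in your own client code.
def current_model(prompt: str) -> str:
    answers = {"What is our refund window?": "Refunds are accepted within 30 days."}
    return answers.get(prompt, "I don't know.")


def candidate_model(prompt: str) -> str:
    return "I don't know."


# Golden set: prompt -> phrase that a correct answer must contain.
GOLDEN: Dict[str, str] = {"What is our refund window?": "30 days"}


def find_regressions(
    old: Callable[[str], str],
    new: Callable[[str], str],
    golden: Dict[str, str],
) -> List[str]:
    """Return prompts the old model answers correctly but the new one does not."""
    regressions = []
    for prompt, must_contain in golden.items():
        old_ok = must_contain.lower() in old(prompt).lower()
        new_ok = must_contain.lower() in new(prompt).lower()
        if old_ok and not new_ok:
            regressions.append(prompt)
    return regressions


for prompt in find_regressions(current_model, candidate_model, GOLDEN):
    print(f"Regression: {prompt}")
```

A substring match is a crude correctness check, but even a few dozen such cases give an early estimate of how much prompt rework a model switch will involve.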

Another critical difference is the tone and conversational quality of models.

Many users appreciate the polished, human-like responses from LLMs like ChatGPT and Claude, which often feel more natural and engaging. That quality can be harder to replicate in SLMs.

This becomes especially noticeable in customer-facing applications.

So When Should You Prefer an SLM over an LLM?

There are still many scenarios where an SLM might be the better choice over an LLM. These include:

When the task closely aligns with the benchmarks the SLM is optimized for.

If you face computational limitations, such as requiring all processing to happen on-device without access to cloud-based computation.

When tight timelines and budgets make training a smaller, specialized model the more practical option.

That said, for most of the clients I work with, these constraints are rare.

I often recommend starting with an LLM, as it can streamline the process, reduce experimentation time, and accelerate results from the outset.
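The criteria above can be sketched as a simple checklist. This is only an illustration of the reasoning in this article, not a formal decision procedure: the class, field names, and function are hypothetical, and real projects will weigh these factors rather than treat them as on/off switches.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ProjectConstraints:
    task_matches_slm_benchmarks: bool  # task closely mirrors what the SLM was evaluated on
    must_run_on_device: bool           # no access to cloud-based computation
    tight_time_and_budget: bool        # a smaller, specialized model is the practical option


def recommend_model(c: ProjectConstraints) -> Tuple[str, List[str]]:
    """Return ("SLM" or "LLM", reasons) following the criteria in this article."""
    reasons = []
    if c.task_matches_slm_benchmarks:
        reasons.append("task aligns with the SLM's benchmarks")
    if c.must_run_on_device:
        reasons.append("processing must stay on-device")
    if c.tight_time_and_budget:
        reasons.append("timeline and budget favor a smaller model")
    if reasons:
        return "SLM", reasons
    return "LLM", ["no SLM-specific constraint applies; start with an LLM"]


choice, why = recommend_model(
    ProjectConstraints(
        task_matches_slm_benchmarks=False,
        must_run_on_device=False,
        tight_time_and_budget=False,
    )
)
print(choice, "-", "; ".join(why))
```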

💡 Need Help with AI Implementations?

Feel free to contact us at our website here for a free 30-minute consultation.

If you know someone who needs help with AI, refer them to us and receive a $1,000 award.

And if you liked this article, follow me on LinkedIn for daily, revenue-impacting AI content.

Our company LinkedIn page is here.