Why Did Air Canada's AI Chatbot Hallucinate Responses?

An engineering perspective on the airline's AI debacle

Executive Summary

Air Canada’s customer support chatbot hallucinated wrong responses, costing the airline money and generating bad press.

The likely cause was an inconsistency between the data the bot was trained on and the official policies on the airline’s website.

AI chatbot projects can still have positive ROI, as evidenced by successful implementations at Bank of America and Klarna. But these initiatives must be executed with diligence to avoid Air Canada’s mistakes.

This is every AI enthusiast’s worst nightmare.

Earlier this year, Air Canada was sued over incorrect answers provided by its AI-powered customer support chatbot.

A passenger asked the chatbot about funeral travel discounts.

The bot mistakenly said they could purchase the ticket now and claim the discount within 90 days.

Satisfied, the passenger went ahead and booked the ticket.

But when they tried to claim the reimbursement after returning, they were told the bot was wrong, the discount had to be requested before booking, and their claim would not be honored.

The case went to court, where Air Canada tried to argue that the chatbot was a “separate legal entity responsible for its own actions.”

The court rejected that defense and ruled in favor of the customer.

The court ordered the airline to pay the customer a partial refund of $650.88 CAD (about $482 USD) on the original fare of $1,640.36 CAD (about $1,216 USD).

The airline also had to pay additional damages to cover interest and court fees.

In today’s article, we will look at this incident from an engineering perspective.

We discuss possible reasons why the bot hallucinated its bereavement policy response, and how you can avoid the same problem in your own chatbot implementation.

Possible Reasons for Hallucination

We could not find any official comment from Air Canada on the technical details of why its bot hallucinated.

The airline appears to have quietly disabled the feature.

However, we know from court documents that the chatbot linked to a page with the right bereavement policy but gave the wrong answer in its response.

Based on this description, we can take educated guesses as to how this hallucination happened.

1. Inconsistent Data

The most likely reason Air Canada’s chatbot gave the wrong information is inconsistent data: a mismatch between what the bot was trained on and the official policies on the airline’s website.

Consider the high-level architecture of a chatbot, which has four main components:

Frontend - the look and feel of the app that users interact with

Query engine - the “brains” of the app that synthesizes the response

Vector database - where external data is stored

Data pipeline - where your data is transformed for AI to ingest

The data pipeline is one of the most crucial parts of a chatbot, since it supplies the information the bot is trained on.

If the data is bad, then the chatbot will be bad too.

Hallucination issues often start within the data pipeline.

That’s where the chatbot could’ve faced the following challenges:

Contradictions - ingesting documents containing contradicting bereavement policies

Outdated info - not properly pulling in the latest bereavement policy and displaying an outdated one

Caught between updates - the customer may have caught the chatbot right before it was able to update its bereavement policy

Noisy data - the data could have irrelevant information that affects the accuracy of its responses

Inconsistencies - the documents the bot ingested were not the same as the documents published in their customer support pages

Because the court filings say the bot linked to the right document even though it gave a wrong response, a data consistency issue is the likely culprit.

The web pages were updated, but the bot was either not retrained on them or was trained on a different bereavement policy.

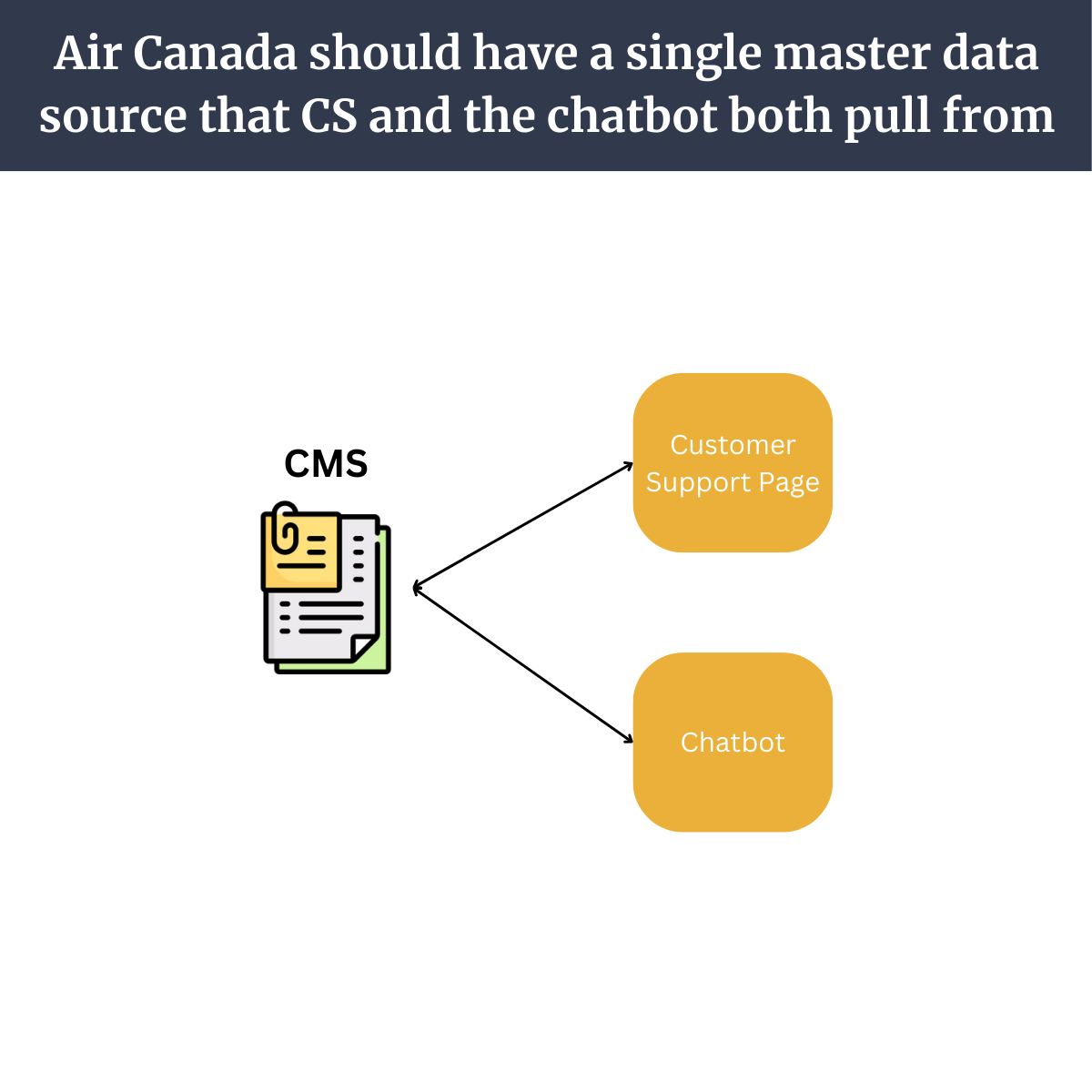

The solution is to ensure that the data the bot consumes is the same as what is published on the website.

For example, Air Canada could either:

use a single CMS for both the bot and the Customer Support page, or

treat the website as the master data source and train the bot exclusively on the website’s content, so the two never fall out of sync.
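To make the idea concrete, here is a minimal Python sketch of an automated sync check that compares what is published with what the bot has ingested. The function names (`fingerprint`, `find_out_of_sync`) and the sample policy text are our own assumptions for illustration, not Air Canada’s actual system or wording.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Hash text after normalizing whitespace and case, so formatting-only changes are ignored."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_out_of_sync(published: dict[str, str], ingested: dict[str, str]) -> list[str]:
    """Return IDs of pages whose ingested copy is missing or differs from the published page."""
    stale = []
    for page_id, live_text in published.items():
        bot_text = ingested.get(page_id)
        if bot_text is None or fingerprint(bot_text) != fingerprint(live_text):
            stale.append(page_id)
    return sorted(stale)


# Hypothetical example data, not real policy text.
published = {
    "bereavement": "Bereavement fares must be requested before booking.",
    "baggage": "One carry-on bag is included.",
}
ingested = {
    "bereavement": "Bereavement fares can be claimed within 90 days of travel.",
    "baggage": "One  carry-on bag is included.",  # differs only in spacing
}

print(find_out_of_sync(published, ingested))  # ['bereavement']
```

A check like this could run on a schedule or as part of every website publish, and block the bot from answering on a topic until the flagged pages have been re-ingested.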

2. Incorrect Chunk Sizing

Although Air Canada’s issue was most likely one of data consistency, hallucinations can happen for a variety of other reasons. A common one is poor chunk sizing.

When AI ingests your custom data, it breaks your documents into smaller “chunks” that it processes individually.

The way your chatbot “chunks” your documents can have a big effect on the accuracy of responses.

For example, consider two different ways we could break up the bereavement policy:

Chunking Option 1:

Chunk 1: Please contact us by phone

Chunk 2: to request a bereavement fare.

Chunk 3: We will ask you to provide us with

Chunk 4: the name of the dying or deceased family member

Chunking Option 2:

Chunk 1:

Please contact us by phone to request a bereavement fare. We will ask you to provide us with the name of the dying or deceased family member and your relationship to them, as well as:

The name of the hospital or residence, including the address and phone number and name of attending physician; or

The name, address and phone number of the memorial or funeral home, including the date of the memorial service or funeral

Chunk 2:

Within 7 days of returning from your bereavement travel, please send an email to Bereavement@AirCanada.ca with your booking reference in the subject line.

To the email, attach one of the following supporting documents as proof of imminent death or bereavement.

When the LLM is looking for relevant chunks to generate the response with, Option 2 will produce the more accurate response because it contains complete context on the bereavement policy.

Option 1 cuts chunks off mid-sentence, so no single chunk captures a complete idea, which makes it difficult for the LLM to determine relevance.

This highlights two important points about chunking:

Chunk sizing involves a trade-off between accuracy and context. The bigger the chunk, the more context is retrieved, but irrelevant information could also get pulled in. The smaller the chunks, the more accurate your answers are, but the less context there is. Consider how important context is compared to accuracy for the tasks your bot is trying to solve.

You want to chunk at the end of complete sentences, paragraphs, sections, and thoughts. Cutting chunks off in the middle could lead to information getting lost.
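The second point can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions: the sentence splitter is a naive regular expression, `max_chars` is a made-up size limit, and the policy text is abridged from the example above. Production systems typically use a tokenizer-aware splitter instead.

```python
import re


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def chunk_by_sentence(text: str, max_chars: int = 200) -> list[str]:
    """Pack whole sentences into chunks of at most max_chars where possible.

    A single sentence longer than max_chars is kept whole rather than cut.
    """
    chunks: list[str] = []
    current = ""
    for sentence in split_sentences(text):
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            # Adding this sentence would overflow, so close the current chunk first.
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


# Abridged policy text, for illustration only.
policy = (
    "Please contact us by phone to request a bereavement fare. "
    "We will ask you to provide us with the name of the dying or deceased family member. "
    "Within 7 days of returning from your bereavement travel, please send us an email."
)

for chunk in chunk_by_sentence(policy, max_chars=150):
    print(repr(chunk))
```

Because chunks only ever end at a sentence boundary, the “request by phone” instruction is never separated from the fare it refers to, unlike Chunking Option 1.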

3. Insufficient Monitoring and Testing

Third, even if the data pipeline is working correctly, changes to the query engine’s retrieval mechanism could introduce hallucinations.

In the last section, we discussed how chatbots break documents into smaller chunks, then retrieve the most relevant chunks to synthesize a response.

It could be that the bot’s query engine originally pulled in all of the right chunks from the bereavement policy, but a change to the retrieval logic caused it to start pulling in different chunks.

An engineer could’ve unknowingly introduced this breaking change.

For a chatbot of this scale, it is likely necessary to have continuous automated testing, where the same set of benchmark questions is fed into each new version of the bot.

Only if its responses remain consistent after changes are introduced should the bot be deployed.
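A regression gate like this can start out very small. The Python sketch below is one possible shape, not a description of any real test suite: the benchmark case, the phrase-matching checks, and the two stand-in bot functions are all hypothetical. A real suite would call the deployed query engine and would likely use more robust answer comparison than substring matching.

```python
from typing import Callable

# Hypothetical benchmark: each case lists phrases the answer must and must not contain.
BENCHMARK = [
    {
        "question": "Can I claim a bereavement fare after I travel?",
        "must_include": ["before"],
        "must_exclude": ["within 90 days"],
    },
]


def run_benchmark(bot: Callable[[str], str], benchmark: list[dict]) -> list[str]:
    """Return a description of every failed check; an empty list means no regressions."""
    failures = []
    for case in benchmark:
        answer = bot(case["question"]).lower()
        for phrase in case["must_include"]:
            if phrase.lower() not in answer:
                failures.append(f"{case['question']!r}: missing {phrase!r}")
        for phrase in case["must_exclude"]:
            if phrase.lower() in answer:
                failures.append(f"{case['question']!r}: contains forbidden {phrase!r}")
    return failures


# Stand-ins for two versions of the bot.
def good_bot(question: str) -> str:
    return "The bereavement discount must be requested before booking."


def bad_bot(question: str) -> str:
    return "You can book now and claim the discount within 90 days."


print(run_benchmark(good_bot, BENCHMARK))      # []
print(len(run_benchmark(bad_bot, BENCHMARK)))  # 2
```

In a deployment pipeline, any non-empty failure list would block the release until an engineer reviews the changed answers.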

4. Wrong Chatbot Architecture

Lastly, these types of hallucination issues could happen if the chatbot wasn’t architected properly.

For example, a question about bereavement policies should only take relevant information from a single page, the bereavement policy, and no chunks from anywhere else.

This could be implemented with a two-tier structure to their bot, where the bot first determines which relevant document(s) it should pull context from before delving into the specific text.

If the chatbot was designed so that every document was treated the same, it could pull in context from irrelevant documents where grace periods are also mentioned, and mix those up with the bereavement policy’s grace period.
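Here is a toy Python sketch of the two-tier idea. Everything in it is an assumption made for illustration: the corpus is invented, and the word-overlap score stands in for the embedding similarity a real query engine would use.

```python
import re

# Hypothetical corpus: document title -> list of chunks.
DOCS = {
    "bereavement policy": [
        "Request a bereavement fare by phone before booking.",
        "Send supporting documents by email after your bereavement travel.",
    ],
    "baggage claims": [
        "Report damaged baggage within 7 days of receiving it.",
    ],
}


def tokens(text: str) -> set[str]:
    """Lowercased set of alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(query: str, text: str) -> int:
    """Toy relevance score: the number of words shared by query and text."""
    return len(tokens(query) & tokens(text))


def two_tier_retrieve(query: str, docs: dict[str, list[str]], top_k: int = 2) -> list[str]:
    # Tier 1: pick the single most relevant document, scored on title plus content.
    best_doc = max(docs, key=lambda title: overlap(query, title + " " + " ".join(docs[title])))
    # Tier 2: rank chunks only within that document.
    ranked = sorted(docs[best_doc], key=lambda chunk: overlap(query, chunk), reverse=True)
    return ranked[:top_k]


print(two_tier_retrieve("How many days do I have to request a bereavement fare?", DOCS))
```

Even though the query mentions “days”, the baggage chunk with its 7-day deadline can never reach the LLM, because the first tier has already narrowed the search to the bereavement policy.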

Should You Still Invest in AI Chatbots?

Some have quipped that incidents like this should discourage organizations from pursuing AI chatbot projects altogether.

We reject this notion.

Chatbot projects can deliver ROI, but they need to be executed with care.

There is precedent for successful AI chatbot implementations driving significant savings.

For example, Bank of America’s Erica AI chatbot answers 1.5 million queries daily, and has contributed to revenue growth through cross-sold services.

In another case, Klarna, a Swedish fintech company, announced that its AI chatbot implementation did the equivalent work of 700 full-time agents and will drive $40 million in profits in 2024.

Air Canada’s case shows that chatbot implementations are not as simple as dumping data into AI.

We recommend the following advice to de-risk your chatbot projects:

Data Quality - Audit your data tech stack to make sure it’s consistent and trustworthy before building any AI applications on top of it

Scoping - Tightly scope your chatbot to specific functions, rather than opening it up to any question, to reduce the chance of errors

Disclaimers - Consider warning customers that the information the chatbot provides may not be accurate, as the lack of a disclaimer opened up Air Canada to legal risk

Investment - Understand that chatbot implementations are investments. Ensure that the expected pie is large enough to justify the effort.

Managing PR - When it comes to potential customer service and PR issues, don’t be penny-wise and pound-foolish. Trying to save a few hundred dollars ended up costing Air Canada a whole lot more.
